I was wondering what this ‘grad_norm’ metric is. I got sidetracked by a paper with that name, which may well be unrelated. I haven’t checked the Tune website yet to see if it’s the same thing. The grad_norm I was looking for is in the ARS code.

https://github.com/ray-project/ray/blob/master/rllib/agents/ars/ars.py

```python
info = {
    "weights_norm": np.square(theta).sum(),
    "weights_std": np.std(theta),
    "grad_norm": np.square(g).sum(),
    "update_ratio": update_ratio,
    "episodes_this_iter": noisy_lengths.size,
    "episodes_so_far": self.episodes_so_far,
}
```

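So it’s the sum of the squares of g, i.e. the squared Euclidean (L2) length of g. And g is… the ‘batched weighted sum’ of the ARS noise perturbations: each perturbation direction is weighted by the difference between the returns of its positive and negative rollouts, the total is divided by the number of directions, and then by the standard deviation of the returns. It’s not theta, which is the policy weights themselves. Right, so theta is the current agent/model brain, g is the estimated gradient (the direction the rollouts say the weights should move), and grad_norm is the squared size of that estimate. So it’s a measure of how hard the weights are being pushed this iteration, before the optimizer turns g into an actual step. It isn’t a variance, though: a sum of squares only becomes a variance after you subtract the mean and divide by the count.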

https://en.wikipedia.org/wiki/Partition_of_sums_of_squares – relevant?
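The partition idea does connect loosely: for any vector, the plain sum of squares splits into a spread part and a mean part, Σ g_i² = n·Var(g) + n·mean(g)². A quick numpy check on a made-up g (not real ARS output) shows that grad_norm is the squared Euclidean length of g, and only equals n times the variance when the components of g average to zero:

```python
import numpy as np

# Toy stand-in for the ARS gradient estimate g (values are made up).
g = np.array([0.5, -1.0, 2.0, 0.5], dtype=np.float32)

grad_norm = np.square(g).sum()   # what RLlib logs as "grad_norm"
l2_norm = np.linalg.norm(g)      # the ordinary Euclidean length of g

# grad_norm is the *squared* length, not the length itself.
assert np.isclose(grad_norm, l2_norm ** 2)

# Sum of squares = n * (variance + mean^2), so it is n * variance
# only when the components of g happen to average to zero.
n = g.size
assert np.isclose(grad_norm, n * (np.var(g) + np.mean(g) ** 2))
```

For this toy g, grad_norm is 5.5 while the variance is only 1.125, because the mean of g (0.5) is not zero.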

```python
# Compute and take a step.
g, count = utils.batched_weighted_sum(
    noisy_returns[:, 0] - noisy_returns[:, 1],
    (self.noise.get(index, self.policy.num_params)
     for index in noise_idx),
    batch_size=min(500, noisy_returns[:, 0].size))
g /= noise_idx.size
# scale the returns by their standard deviation
if not np.isclose(np.std(noisy_returns), 0.0):
    g /= np.std(noisy_returns)
assert (g.shape == (self.policy.num_params, )
        and g.dtype == np.float32)
# Compute the new weights theta.
theta, update_ratio = self.optimizer.update(-g)
# Set the new weights in the local copy of the policy.
self.policy.set_flat_weights(theta)
# update the reward list
if len(all_eval_returns) > 0:
    self.reward_list.append(eval_returns.mean())
```
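To check my reading of the step above, here is a toy, self-contained numpy version of it. `ars_step` is a hypothetical helper, not RLlib code: it computes g as the return-difference-weighted sum of the noise directions (the same sum batched_weighted_sum computes in chunks), divides by the number of directions and by the std of all returns, then takes a plain gradient-ascent step, which is my stand-in for RLlib’s optimizer object. It uses every direction; ARS proper can also keep only the best-performing directions first, which this sketch skips.

```python
import numpy as np

def ars_step(theta, deltas, returns_pos, returns_neg, stepsize=0.02):
    """Toy ARS-style update mirroring the structure of the RLlib excerpt.

    Hypothetical helper, not RLlib API. Assumes a plain gradient-ascent step
    (no momentum) in place of RLlib's optimizer object.
      deltas:      (N, P) array, one noise direction per row
      returns_pos: (N,) returns from theta + delta_i
      returns_neg: (N,) returns from theta - delta_i
    """
    diffs = returns_pos - returns_neg                 # r+ - r- for each direction
    g = (diffs @ deltas) / len(deltas)                # weighted sum of directions, averaged
    all_returns = np.concatenate([returns_pos, returns_neg])
    if not np.isclose(np.std(all_returns), 0.0):
        g = g / np.std(all_returns)                   # scale by the spread of the returns
    step = stepsize * g                               # move theta along +g (return ascent)
    new_theta = theta + step
    info = {
        "weights_norm": np.square(new_theta).sum(),   # squared L2 norm of the new weights
        "grad_norm": np.square(g).sum(),              # squared L2 norm of the estimate g
        "update_ratio": np.linalg.norm(step) / np.linalg.norm(theta),  # assumes theta != 0
    }
    return new_theta, info

# Usage on a made-up linear "return" R(params) = params . w.
rng = np.random.default_rng(0)
theta = rng.normal(size=5)
deltas = rng.normal(size=(8, 5))
w = np.arange(5.0)
new_theta, info = ars_step(theta, deltas, (theta + deltas) @ w, (theta - deltas) @ w)
print(info)
```

With a linear return, the step always has a positive component along w, so the toy policy improves; grad_norm is simply the squared length of that push before the step size shrinks it.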

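```python
import numpy as np

# itergroups() comes from the same utils module; it yields successive
# tuples of up to batch_size items from an iterable.
def batched_weighted_sum(weights, vecs, batch_size):
    """Return (sum_i weights[i] * vecs[i], number of items summed)."""
    total = 0
    num_items_summed = 0
    # Consume weights and vecs in matching chunks, so only batch_size
    # vectors are materialised at a time.
    for batch_weights, batch_vecs in zip(
            itergroups(weights, batch_size), itergroups(vecs, batch_size)):
        assert len(batch_weights) == len(batch_vecs) <= batch_size
        # (k,) . (k, P) -> (P,): this chunk's weighted sum of vectors.
        total += np.dot(
            np.asarray(batch_weights, dtype=np.float32),
            np.asarray(batch_vecs, dtype=np.float32))
        num_items_summed += len(batch_weights)
    return total, num_items_summed
```

Well anyway. I found another GradNorm.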

GradNorm

https://arxiv.org/pdf/1711.02257.pdf – “We present a gradient normalization (GradNorm) algorithm that automatically balances training in deep multitask models by dynamically tuning gradient magnitudes.”

Conclusions

We introduced GradNorm, an efficient algorithm for tuning loss weights in a multi-task learning setting based on balancing the training rates of different tasks. We demonstrated on both synthetic and real datasets that GradNorm improves multitask test-time performance in a variety of scenarios, and can accommodate various levels of asymmetry amongst the different tasks through the hyperparameter α. Our empirical results indicate that GradNorm offers superior performance over state-of-the-art multitask adaptive weighting methods and can match or surpass the performance of exhaustive grid search while being significantly less time-intensive.

Looking ahead, algorithms such as GradNorm may have applications beyond multitask learning. We hope to extend the GradNorm approach to work with class-balancing and sequence-to-sequence models, all situations where problems with conflicting gradient signals can degrade model performance. We thus believe that our work not only provides a robust new algorithm for multitask learning, but also reinforces the powerful idea that gradient tuning is fundamental for training large, effective models on complex tasks.
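The conclusion doesn’t show the update itself, so here is a minimal numpy sketch of how I read GradNorm’s weight update. G_i = w_i·||∇_W L_i|| is each task’s weighted gradient norm at a shared layer W, r_i is its loss ratio L_i(t)/L_i(0) divided by the mean ratio, and the weights are nudged so that each G_i moves toward mean(G)·r_i^α (that target held constant), then renormalised to sum to the number of tasks. `gradnorm_update` is a hypothetical helper; it assumes the per-task gradient norms are already computed (normally by autograd), and the default α and learning rate are arbitrary.

```python
import numpy as np

def gradnorm_update(w, grad_norms, losses, initial_losses, alpha=1.5, lr=0.025):
    """One GradNorm-style update of the task loss weights (hypothetical helper).

    w:              (T,) current loss weights w_i
    grad_norms:     (T,) ||grad_W L_i|| of each *unweighted* task loss with respect
                    to a shared layer W (assumed to be computed elsewhere)
    losses:         (T,) current task losses L_i(t)
    initial_losses: (T,) task losses at the first step, L_i(0)
    """
    G = w * grad_norms                     # G_i = w_i * ||grad_W L_i||
    G_bar = G.mean()                       # average weighted gradient norm
    loss_ratio = losses / initial_losses   # L~_i = L_i(t) / L_i(0)
    r = loss_ratio / loss_ratio.mean()     # relative inverse training rate r_i
    target = G_bar * r ** alpha            # treated as a constant when differentiating
    # L_grad = sum_i |G_i - target_i|; since G_i is linear in w_i,
    # dL_grad/dw_i = sign(G_i - target_i) * ||grad_W L_i||.
    grad_w = np.sign(G - target) * grad_norms
    w = w - lr * grad_w
    # Renormalise so the weights sum to the number of tasks T.
    return w * len(w) / w.sum()

# Two tasks making equal progress, but task 0 has a 4x larger gradient.
new_w = gradnorm_update(
    w=np.ones(2),
    grad_norms=np.array([4.0, 1.0]),
    losses=np.array([0.5, 0.5]),
    initial_losses=np.array([1.0, 1.0]),
)
print(new_w)  # task 0's weight goes down, task 1's goes up
```

If both tasks already have equal gradient norms and equal loss ratios, every G_i sits exactly on its target and the weights stay put.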

…

The paper derived the formulation of the multitask loss based on the maximization of the Gaussian likelihood with homoscedastic* uncertainty. I will not go to the details here, but the simplified forms are strikingly simple.

(^ https://towardsdatascience.com/self-paced-multitask-learning-76c26e9532d0)

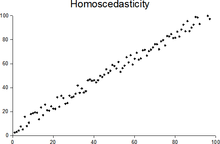

*

In statistics, a sequence (or a vector) of random variables is homoscedastic /ˌhoʊmoʊskəˈdæstɪk/ if all its random variables have the same finite variance. This is also known as homogeneity of variance. The complementary notion is called heteroscedasticity.
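Putting the footnote back into the loss: here ‘homoscedastic’ means each task i gets one noise scale σ_i that is the same for every input. Assuming the simplified form in the image is the usual regression-style one, the combined loss is Σ_i L_i/(2σ_i²) + log σ_i. The sketch below uses hypothetical helper names, and parametrising s_i = log σ_i² is my choice for numerical convenience. It holds the task losses fixed and learns only the s_i, which should settle at σ_i² = L_i, since setting the derivative −L_i/σ_i³ + 1/σ_i to zero gives exactly that.

```python
import numpy as np

def uncertainty_weighted_loss(task_losses, log_vars):
    """Sum_i L_i / (2 sigma_i^2) + log sigma_i, with s_i = log sigma_i^2.

    Since log sigma_i = s_i / 2, each term is 0.5 * exp(-s_i) * L_i + 0.5 * s_i.
    One s_i per task, shared by every input: the homoscedastic assumption.
    """
    task_losses = np.asarray(task_losses, dtype=float)
    log_vars = np.asarray(log_vars, dtype=float)
    return np.sum(0.5 * np.exp(-log_vars) * task_losses + 0.5 * log_vars)

def grad_log_vars(task_losses, log_vars):
    """d(loss)/d(s_i) = -0.5 * exp(-s_i) * L_i + 0.5."""
    task_losses = np.asarray(task_losses, dtype=float)
    log_vars = np.asarray(log_vars, dtype=float)
    return -0.5 * np.exp(-log_vars) * task_losses + 0.5

# Hold the task losses fixed and learn only the log-variances by gradient descent.
losses = np.array([4.0, 0.25])
s = np.zeros(2)
for _ in range(2000):
    s -= 0.1 * grad_log_vars(losses, s)

print(np.exp(s))  # learned sigma_i^2, close to the task losses themselves
```

So, with the losses held fixed, a task with a large loss ends up with a large σ_i and therefore a small weight 1/(2σ_i²), which is the self-balancing effect the simplified form is after.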