In the beginning there’s this hilarious intro to the Swedish Housewives Association or something. I’m imagining some footage of pictures of 3D bot models being shown to chickens, or something equally silly, with very serious notes being taken about it. Recommend watching anyway.

DeepAI APIs

I made this at https://deepai.org/machine-learning-model/fast-style-transfer

Hehe cool.

There’s a lot of them. Heh Parsey McParseface API https://deepai.org/machine-learning-model/parseymcparseface

    [
      {
        "tree": {
          "ROOT": [
            {
              "index": 1,
              "token": "What",
              "tree": {
                "cop": [
                  {"index": 2, "token": "is", "pos": "VBZ", "label": "VERB"}
                ],
                "nsubj": [
                  {
                    "index": 4,
                    "token": "meaning",
                    "tree": {
                      "det": [
                        {"index": 3, "token": "the", "pos": "DT", "label": "DET"}
                      ],
                      "prep": [
                        {
                          "index": 5,
                          "token": "of",
                          "tree": {
                            "pobj": [
                              {"index": 6, "token": "this", "pos": "DT", "label": "DET"}
                            ]
                          },
                          "pos": "IN",
                          "label": "ADP"
                        }
                      ]
                    },
                    "pos": "NN",
                    "label": "NOUN"
                  }
                ],
                "punct": [
                  {"index": 7, "token": "?", "pos": ".", "label": "."}
                ]
              },
              "pos": "WP",
              "label": "PRON"
            }
          ]
        },
        "sentence": "What is the meaning of this?"
      }
    ]
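The nesting is easier to read as a flat list of dependency edges. Here is a minimal sketch that walks the structure, assuming the response always has the shape shown above (relation name, then a list of child nodes, each with an optional nested "tree"). The function name `dependency_edges` is mine, and the embedded JSON is a trimmed copy of the response with the "prep" branch left out.

```python
import json

# Trimmed copy of the response above (the "prep" branch under "meaning" is omitted).
RESPONSE = json.loads("""
[{"tree": {"ROOT": [{"index": 1, "token": "What", "pos": "WP", "label": "PRON",
   "tree": {
     "cop": [{"index": 2, "token": "is", "pos": "VBZ", "label": "VERB"}],
     "nsubj": [{"index": 4, "token": "meaning", "pos": "NN", "label": "NOUN",
        "tree": {"det": [{"index": 3, "token": "the", "pos": "DT", "label": "DET"}]}}],
     "punct": [{"index": 7, "token": "?", "pos": ".", "label": "."}]
   }}]},
  "sentence": "What is the meaning of this?"}]
""")


def dependency_edges(tree, head="ROOT"):
    """Yield (head_token, relation, dependent_token) for every node in the tree."""
    for relation, children in tree.items():
        for child in children:
            yield head, relation, child["token"]
            # Leaf nodes have no nested "tree" key, so fall back to an empty dict.
            yield from dependency_edges(child.get("tree", {}), head=child["token"])


edges = list(dependency_edges(RESPONSE[0]["tree"]))
for head, relation, dependent in edges:
    print(f"{head} -{relation}-> {dependent}")
```

So "What" is the root, with "is", "meaning" and "?" hanging off it, and "the" hanging off "meaning".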

Some curated research too, https://deepai.org/research – one article, https://arxiv.org/pdf/2007.05558v1.pdf, argues that deep learning’s appetite for computing power is becoming a hard limit on further progress.

Conclusion

The explosion in computing power used for deep learning models has ended the “AI winter” and set new benchmarks for computer performance on a wide range of tasks. However, deep learning’s prodigious appetite for computing power imposes a limit on how far it can improve performance in its current form, particularly in an era when improvements in hardware performance are slowing. This article shows that the computational limits of deep learning will soon be constraining for a range of applications, making the achievement of important benchmark milestones impossible if current trajectories hold. Finally, we have discussed the likely impact of these computational limits: forcing Deep Learning towards less computationally-intensive methods of improvement, and pushing machine learning towards techniques that are more computationally-efficient than deep learning.

Yeah, well, the neocortex has like six “hidden” layers, with sparse distributions, with voting / normalising layers. Just a 3D graph of neurons, doing some wiggly things.

I was wondering what this ‘grad_norm’ parameter is. I got sidetracked by the paper with this name, which is maybe even unrelated. I haven’t gone to the Tune website yet to check if it’s the same thing. The grad_norm I was looking for is in the ARS code.

https://github.com/ray-project/ray/blob/master/rllib/agents/ars/ars.py

    info = {
        "weights_norm": np.square(theta).sum(),
        "weights_std": np.std(theta),
        "grad_norm": np.square(g).sum(),
        "update_ratio": update_ratio,
        "episodes_this_iter": noisy_lengths.size,
        "episodes_so_far": self.episodes_so_far,
    }

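So, it’s the sum of the squares of g. And g is the total of the ‘batched weighted sum’ of the ARS perturbation results, I think: each noise direction gets weighted by the difference between its positive and negative rollout returns, the weighted directions are summed, and the result is divided by the number of directions and by the standard deviation of the returns. It’s not theta, which is the policy itself. Right, so the policy is the current agent/model brains, and g is the estimated gradient, the direction the rollouts say the weights should move in. So grad_norm, as a sum of squares, is the squared L2 norm of that estimate (not a variance, since nothing is mean-centred). It’s a sort of measure of how big a push the weights are about to get, I think.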
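To convince myself, here is a toy NumPy version of the same calculation. It is only a sketch: the sizes, the random noise directions and the made-up returns are all assumptions for illustration, and it skips the batching that the real code does.

```python
import numpy as np

rng = np.random.default_rng(0)

num_params = 5      # size of the flat policy vector theta (toy value)
num_directions = 8  # number of perturbation directions tried (toy value)

# Each row is one noise direction that was added to / subtracted from theta.
deltas = rng.standard_normal((num_directions, num_params)).astype(np.float32)
# Made-up returns for the +delta and -delta rollouts of each direction.
noisy_returns = rng.standard_normal((num_directions, 2)).astype(np.float32)

# Weight each direction by (return_plus - return_minus) and sum them up.
weights = noisy_returns[:, 0] - noisy_returns[:, 1]
g = weights @ deltas
g /= num_directions          # average over the directions
g /= np.std(noisy_returns)   # scale by the spread of the returns

grad_norm = np.square(g).sum()
print(grad_norm, np.linalg.norm(g) ** 2)
```

The two printed numbers agree up to float rounding, so grad_norm here is just the squared length of g.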

https://en.wikipedia.org/wiki/Partition_of_sums_of_squares – relevant?

    # Compute and take a step.
    g, count = utils.batched_weighted_sum(
        noisy_returns[:, 0] - noisy_returns[:, 1],
        (self.noise.get(index, self.policy.num_params)
         for index in noise_idx),
        batch_size=min(500, noisy_returns[:, 0].size))
    g /= noise_idx.size
    # scale the returns by their standard deviation
    if not np.isclose(np.std(noisy_returns), 0.0):
        g /= np.std(noisy_returns)
    assert (g.shape == (self.policy.num_params, )
            and g.dtype == np.float32)
    # Compute the new weights theta.
    theta, update_ratio = self.optimizer.update(-g)
    # Set the new weights in the local copy of the policy.
    self.policy.set_flat_weights(theta)
    # update the reward list
    if len(all_eval_returns) > 0:
        self.reward_list.append(eval_returns.mean())

    def batched_weighted_sum(weights, vecs, batch_size):
        total = 0
        num_items_summed = 0
        for batch_weights, batch_vecs in zip(
                itergroups(weights, batch_size), itergroups(vecs, batch_size)):
            assert len(batch_weights) == len(batch_vecs) <= batch_size
            total += np.dot(
                np.asarray(batch_weights, dtype=np.float32),
                np.asarray(batch_vecs, dtype=np.float32))
            num_items_summed += len(batch_weights)
        return total, num_items_summed

Well anyway. I found another GradNorm.

GradNorm

https://arxiv.org/pdf/1711.02257.pdf – “We present a gradient normalization (GradNorm) algorithm that automatically balances training in deep multitask models by dynamically tuning gradient magnitudes.”

Conclusions

We introduced GradNorm, an efficient algorithm for tuning loss weights in a multi-task learning setting based on balancing the training rates of different tasks. We demonstrated on both synthetic and real datasets that GradNorm improves multitask test-time performance in a variety of scenarios, and can accommodate various levels of asymmetry amongst the different tasks through the hyperparameter α. Our empirical results indicate that GradNorm offers superior performance over state-of-the-art multitask adaptive weighting methods and can match or surpass the performance of exhaustive grid search while being significantly less time-intensive.

Looking ahead, algorithms such as GradNorm may have applications beyond multitask learning. We hope to extend the GradNorm approach to work with class-balancing and sequence-to-sequence models, all situations where problems with conflicting gradient signals can degrade model performance. We thus believe that our work not only provides a robust new algorithm for multitask learning, but also reinforces the powerful idea that gradient tuning is fundamental for training large, effective models on complex tasks.
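As far as I understand the paper, the core idea is that each task gets a target gradient norm: the average norm across tasks, scaled up for tasks that are training slowly and down for tasks that are training fast, with α controlling how strongly. Here is a rough NumPy sketch of just that target calculation. The function name and the example numbers are mine, and a real implementation would compute the gradient norms with an autograd framework and backpropagate this loss into the task weights.

```python
import numpy as np


def gradnorm_targets(grad_norms, losses, initial_losses, alpha):
    """Return (target gradient norms, L1 GradNorm loss) for one training step."""
    grad_norms = np.asarray(grad_norms, dtype=np.float64)
    # Loss ratio: how far each task has come since the start (smaller = faster).
    loss_ratios = (np.asarray(losses, dtype=np.float64)
                   / np.asarray(initial_losses, dtype=np.float64))
    # Relative inverse training rate: above 1 means slower than the average task.
    relative_rates = loss_ratios / loss_ratios.mean()
    # Slow tasks get a larger target norm, fast tasks a smaller one.
    targets = grad_norms.mean() * relative_rates ** alpha
    gradnorm_loss = np.abs(grad_norms - targets).sum()
    return targets, gradnorm_loss


# Task 0 has a big gradient but is already training fast; task 1 is lagging.
targets, loss = gradnorm_targets(
    grad_norms=[2.0, 1.0], losses=[0.5, 0.9], initial_losses=[1.0, 1.0], alpha=1.0)
print(targets, loss)
```

With α = 0 every task just gets the average norm as its target, and a larger α pushes harder towards equalising the training rates.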

…

The paper derived the formulation of the multitask loss based on the maximization of the Gaussian likelihood with homoscedastic* uncertainty. I will not go to the details here, but the simplified forms are strikingly simple.

(^ https://towardsdatascience.com/self-paced-multitask-learning-76c26e9532d0)

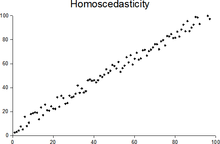

*

In statistics, a sequence (or a vector) of random variables is homoscedastic /ˌhoʊmoʊskəˈdæstɪk/ if all its random variables have the same finite variance. This is also known as homogeneity of variance. The complementary notion is called heteroscedasticity.

(Population Based Training, and Augmented Random Search)

Got a reward of 902 with this robotable. That’s a success. It’s an amusing walk. Still has a way to go, probably.

Miranda doesn’t want to train it with that one dodge ball algorithm you sometimes see, for toughening up AIs. I’ll see about adding in the uneven terrain though, and maybe trying to run that obstacle course library.

But there are other, big things to do, which take some doing.

The egg-scooper, or candler, or handler, or picker-upper will likely use an approach similar to the OpenAI Rubik’s cube solver, with a camera in simulation as the input to a Convolutional Neural Network of some sort, so that there is a transferred mapping, between simulated camera, and real camera.

Also, getting started on Sim-to-Real attempts, of transferring locomotion policies to the RPi robot, seeing if it will walk.

The PBT algorithm changes up the hyperparameters occasionally.
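Roughly, the "changing up" is an exploit-and-explore step: the worst performers copy a top performer’s weights and hyperparameters, then the copied hyperparameters get nudged. A toy sketch in plain Python, not Ray’s implementation; the function name, the dict layout, the hyperparameter names and the 0.8 / 1.2 factors are all placeholders I picked for illustration.

```python
import copy
import random


def pbt_step(population, rng, perturb_factors=(0.8, 1.2), bottom_fraction=0.25):
    """One exploit-and-explore step over dicts with score, hyperparams, weights."""
    ranked = sorted(population, key=lambda member: member["score"], reverse=True)
    cutoff = max(1, int(len(ranked) * bottom_fraction))
    top, bottom = ranked[:cutoff], ranked[-cutoff:]
    for loser in bottom:
        winner = rng.choice(top)
        # Exploit: copy the better member's weights and hyperparameters.
        loser["weights"] = copy.deepcopy(winner["weights"])
        loser["hyperparams"] = dict(winner["hyperparams"])
        # Explore: nudge each copied hyperparameter up or down.
        for name in loser["hyperparams"]:
            loser["hyperparams"][name] *= rng.choice(perturb_factors)
    return population


rng = random.Random(0)
population = [
    {"score": 902.0, "hyperparams": {"step_size": 0.01}, "weights": [0.1, 0.2]},
    {"score": 450.0, "hyperparams": {"step_size": 0.02}, "weights": [0.3, 0.1]},
    {"score": 300.0, "hyperparams": {"step_size": 0.05}, "weights": [0.0, 0.9]},
    {"score": 12.0, "hyperparams": {"step_size": 0.50}, "weights": [0.7, 0.7]},
]
best, worst = population[0], population[3]
pbt_step(population, rng)
print(worst["weights"], worst["hyperparams"])
```

After the step, the worst member carries the best member’s weights and a slightly perturbed copy of its step size, while the best member is left alone.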

It might be smart to use ensemble or continuous learning by switching to a PPO implementation at the 902 reward checkpoint.

I get the sense that gradient descent becomes more useful once you’ve got past the novelty pitfalls, like learning to step forward instead of falling over. It can probably speed up learning at this point.

DCGAN and PGGAN and PAGAN

GAN – Generative Adversarial Networks

It looks like the main use of GANs, when not generating things that don’t exist, is to generate sample datasets based on real datasets, to increase the sample size of training data for some machine learning task, like detecting tomato diseases, or breast cancer.

The papers all confirm that it generates fake data that is pretty much indistinguishable from the real stuff.

DCGAN – Deep Convolutional GAN – https://arxiv.org/pdf/1511.06434.pdf https://github.com/carpedm20/DCGAN-tensorflow

PGGAN – Progressively Growing GAN – https://arxiv.org/pdf/1710.10196.pdf https://github.com/akanimax/pro_gan_pytorch

PA-GAN – Progressive Attention GAN – https://deepai.org/publication/pa-gan-progressive-attention-generative-adversarial-network-for-facial-attribute-editing – https://github.com/LynnHo/PA-GAN-Tensorflow

Examining the Capability of GANs to Replace Real Biomedical Images in Classification Models Training

(Trying to generate Chest XRays and Histology images for coming up with new material for datasets)

https://arxiv.org/pdf/1904.08688.pdf –

Interesting difference between the algorithms, like the PGGANs didn’t mess up male and female body halves. Lots of talk about ‘mode collapse’ (the article below calls it ‘modal collapse’) – https://www.geeksforgeeks.org/modal-collapse-in-gans/

Modal Collapse in GANs

25-06-2019

Prerequisites: General Adversarial Network

Although Generative Adversarial Networks are very powerful neural networks which can be used to generate new data similar to the data they were trained upon, they are limited in the sense that they can be trained upon only single-modal data, i.e. data whose dependent variable consists of only one categorical entry.

If a Generative Adversarial Network is trained on multi-modal data, it leads to Modal Collapse. Modal Collapse refers to a situation in which the generator part of the network generates only a limited amount of variety of samples regardless of the input. This means that when the network is trained upon a multi-modal data directly, the generator learns to fool the discriminator by generating only a limited variety of data.

The following flow-chart illustrates training of a Generative Adversarial Network when trained upon a dataset containing images of cats and dogs:

The following approaches can be used to tackle Modal Collapse:-

- Grouping the classes: One of the primary methods to tackle Modal Collapse is to group the data according to the different classes present in the data. This gives the discriminator the power to discriminate against sub-batches and determine whether a given batch is real or fake.

- Anticipating Counter-actions: This method focuses on removing the situation of the discriminator “chasing” the generator by training the generator to maximally fool the discriminator by taking into account the counter-actions of the discriminator. This method has the downside of increased training time and complicated gradient calculation.

- Learning from Experience: This approach involves training the discriminator on the old fake samples which were generated by the generator in a fixed number of iterations.

- Multiple Networks: This method involves training multiple Generative networks for each different class thus covering all the classes of the data. The disadvantages include increased training time and typical reduction in the quality of the generated data.
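A crude way to see what collapse looks like in numbers is to check which modes of the real data the generated samples actually land near. Here is a toy 1-D sketch with NumPy, where the three mode centres, the radius and the 5% threshold are arbitrary choices of mine, and the "generators" are just random draws rather than trained networks.

```python
import numpy as np


def mode_coverage(samples, mode_centers, radius=1.0, min_fraction=0.05):
    """For each mode, report whether enough samples fall within radius of it."""
    samples = np.asarray(samples, dtype=np.float64)
    covered = []
    for center in mode_centers:
        fraction_near = np.mean(np.abs(samples - center) < radius)
        covered.append(bool(fraction_near >= min_fraction))
    return covered


rng = np.random.default_rng(0)
mode_centers = [-4.0, 0.0, 4.0]

# A "healthy" generator spreads its samples over all three modes.
healthy = rng.choice(mode_centers, size=3000) + 0.3 * rng.standard_normal(3000)
# A "collapsed" generator only ever produces samples around one mode.
collapsed = 0.0 + 0.3 * rng.standard_normal(3000)

print(mode_coverage(healthy, mode_centers))
print(mode_coverage(collapsed, mode_centers))
```

The collapsed samples can still look perfectly realistic one at a time, which is why the discriminator alone doesn’t catch it.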

Oh wow so many GANs out there:

PATE-GAN, GANSynth, ProbGAN, InstaGAN, RelGAN, MisGAN, SPIGAN, LayoutGAN, KnockoffGAN

The Ray framework has this Analysis class (and ExperimentAnalysis class), and I noticed the code was kinda buggy, because it should have handled episode_reward_mean being NaN better: https://github.com/ray-project/ray/issues/9826 (episode_reward_mean is an averaged value, so it appears as NaN (Not a Number) for the first few rollouts). It was fixed a mere 18 days ago, so I can download the nightly release instead.

# pip install -U https://s3-us-west-2.amazonaws.com/ray-wheels/latest/ray-0.9.0.dev0-cp37-cp37m-manylinux1_x86_64.whl

or, since I still have 3.6 installed,

# pip install -U https://s3-us-west-2.amazonaws.com/ray-wheels/latest/ray-0.9.0.dev0-cp36-cp36m-manylinux1_x86_64.whl

Successfully installed aioredis-1.3.1 blessings-1.7 cachetools-4.1.1 colorful-0.5.4 contextvars-2.4 google-api-core-1.22.0 google-auth-1.20.0 googleapis-common-protos-1.52.0 gpustat-0.6.0 hiredis-1.1.0 immutables-0.14 nvidia-ml-py3-7.352.0 opencensus-0.7.10 opencensus-context-0.1.1 pyasn1-0.4.8 pyasn1-modules-0.2.8 ray-0.9.0.dev0 rsa-4.6
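For anyone stuck on an older build, the general shape of a NaN-safe "which iteration was best" lookup is something like this. It is a sketch with NumPy only, not the actual Ray fix; the function name and the example numbers are made up.

```python
import numpy as np


def best_iteration(reward_means):
    """Index of the highest reward mean, ignoring NaN entries (None if all NaN)."""
    rewards = np.asarray(reward_means, dtype=np.float64)
    if np.all(np.isnan(rewards)):
        # np.nanargmax raises ValueError on all-NaN input, so bail out early.
        return None
    return int(np.nanargmax(rewards))


# The first rollouts have no finished episodes yet, so the mean is NaN.
history = [float("nan"), float("nan"), -35.2, 120.5, 88.0]
print(best_iteration(history))
```

A plain max() over that list can hand back NaN, because every comparison against NaN is False.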

https://github.com/openai/gym/issues/1153

Phew. Wheels. Better than eggs, old greybeard probably said. https://pythonwheels.com/

What are wheels?

Wheels are the new standard of Python distribution and are intended to replace eggs.

Yee hah!

DSO: Direct Sparse Odometry

It seems I didn’t mention this algorithm, but it’s a SLAM-like point cloud thing I must have played with, as I came across this screenshot

Tips section from https://github.com/JakobEngel/dso

Accurate Geometric Calibration

- Please have a look at Chapter 4.3 from the DSO paper, in particular Figure 20 (Geometric Noise). Direct approaches suffer a LOT from bad geometric calibrations: Geometric distortions of 1.5 pixel already reduce the accuracy by factor 10.

- Do not use a rolling shutter camera, the geometric distortions from a rolling shutter camera are huge. Even for high frame-rates (over 60fps).

- Note that the reprojection RMSE reported by most calibration tools is the reprojection RMSE on the “training data”, i.e., overfitted to the images you used for calibration. If it is low, that does not imply that your calibration is good, you may just have used insufficient images.

- try different camera / distortion models, not all lenses can be modelled by all models.

Photometric Calibration

Use a photometric calibration (e.g. using https://github.com/tum-vision/mono_dataset_code ).

Translation vs. Rotation

DSO cannot do magic: if you rotate the camera too much without translation, it will fail. Since it is a pure visual odometry, it cannot recover by re-localizing, or track through strong rotations by using previously triangulated geometry…. everything that leaves the field of view is marginalized immediately.

Tensorboard

TensorBoard is TensorFlow’s visualisation dashboard, served by default at localhost:6006

tensorboard --logdir=.

tensorboard --logdir=/root/ray_results/ for all the experiments

I ran the ARS algorithm with Ray, on the robotable environment, and left it running for a day with the UI off. I set it up to run Tune, but the environments are 400MB of RAM each, so it’s pretty close to the 4GB in this laptop, so I was only running a single experiment.

So the next thing is to get it to start play back from a checkpoint.

(A few days pass, the github issue I had was something basic, that I thought I’d checked.)

So now I have a process where it runs 100 iterations, then uses the best checkpoint as the starting policy for the next 100 iterations. Now it might just be wishful thinking, but I do actually see a positive trend through the graphs, in ‘wall’ view. There’s also lots of variation of falling over, so I think we might just need to get these hyperparameters tuning. (Probably need to tweak reward weights too. But lol, giving AI access to its own reward function… )

Just a note on that, the AI will definitely just be like, *999999
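The restart-from-best-checkpoint loop described above boils down to something like the sketch below. Everything in it is a stand-in: `train_round` is a hypothetical toy that fakes a training run with random nudges to a single number, not a Ray call, and the real thing would save and restore actual checkpoints.

```python
import random


def train_round(start_policy, iterations, rng):
    """Hypothetical stand-in for a training run: one (policy, reward) per checkpoint."""
    checkpoints = []
    policy = start_policy
    for _ in range(iterations):
        policy = policy + rng.gauss(0.0, 1.0)  # pretend weight update
        reward = -abs(policy - 10.0)           # toy reward, peaks at policy == 10
        checkpoints.append((policy, reward))
    return checkpoints


def train_with_restarts(rounds, iterations_per_round, seed=0):
    """Run several rounds, each starting from the best checkpoint seen so far."""
    rng = random.Random(seed)
    best_policy, best_reward = 0.0, float("-inf")
    history = []
    for _ in range(rounds):
        checkpoints = train_round(best_policy, iterations_per_round, rng)
        round_policy, round_reward = max(checkpoints, key=lambda c: c[1])
        # Only move the starting point if this round improved on the best so far.
        if round_reward > best_reward:
            best_policy, best_reward = round_policy, round_reward
        history.append(best_reward)
    return best_policy, history


best_policy, history = train_with_restarts(rounds=5, iterations_per_round=100)
print(history)
```

Keeping the best checkpoint across all rounds, rather than only the latest round’s best, means a bad round can’t drag the starting point backwards.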

After training it overnight, with the PBT & ARS, it looks like one policy really beat out the other ones.

It ran a lot longer than the others.

Habitat-Sim

https://github.com/facebookresearch/habitat-sim

A flexible, high-performance 3D simulator with configurable agents, multiple sensors, and generic 3D dataset handling (with built-in support for MatterPort3D, Gibson, Replica, and other datasets).