Dot and cross products

Just a reminder of what these mean.

Dot Product

Dot product is a scalar magnitude. You take the X components of both and multiply them together, and take the Y components of both and multiply them together, and then add those.

Algebraically, the dot product is the sum of the products of the corresponding entries of the two sequences of numbers. Geometrically, it is the product of the Euclidean magnitudes of the two vectors and the cosine of the angle between them. These definitions are equivalent when using Cartesian coordinates.

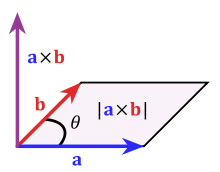

The Cross Product

The cross product a × b is defined as a vector c that is perpendicular (orthogonal) to both a and b, with a direction given by the right-hand rule and a magnitude equal to the area of the parallelogram that the vectors span.

Understanding MotorDemo.cpp (Part 2)

Since this is the meaty bits where the robot is configured, we need to understand links and joints first. Here is the bit from the docs:

Ok so that’s like the basics, which is pretty confusingly worded here. But basically, because the body isn’t counted as a link, you have the same number of links as joints, since you add a joint and link, each time you add a new body part.

Maybe good to recall the 6 degrees of freedom:

So the one function is localCreateRigidBody,

you can follow along in the code (We’re looking at TestRig) here:

https://github.com/bulletphysics/bullet3/blob/master/examples/DynamicControlDemo/MotorDemo.cpp

Takes mass, startTransform, and shape.

If mass is not 0, it is ‘dynamic’. If it’s dynamic, calculate the local inertia on the shape, using the mass and local inertia.

The default motion state uses the startTransform

The rigid body construction info takes mass, motion state, shape and local inertia. body is then pointing to the rigid body created using the construction info.

The rigid body is added to the world, and returned.

So the Test Rig takes the world, a vector position offset and a boolean of whether it is fixed or not.

The comment says // Setup geometry

Body size is 0.25, legs length are 0.45, foreleg lengths are 0.75 long.

shapes[0] is a capsule shape of ‘body size’ radius, and 0.1 scalar height. Then it iterates through the number of legs, adding in shapes[i] as leg capsules with .1 radius and leg length, and shapes[i+1] as foreleg capsules with .08 radius and foreleg length.

Next is // Setup rigid bodies

It just sets up the offset origin.

Next is // root

Root’s vector is set as 0.5 height, and a transform set up with origin at root. If the ‘fixed’ variable is set, the root body is set up with (mass, startTransform, and shape) of 0, offset*transform, and the capsule shape set up just now. If ‘fixed’ variable is not set, the mass is 1, same place, same shape.

Next is // legs

This bit is complicated. Angle is 2*PI*i/NUM_LEGS, to evenly distribute the legs around the root body. fSin is the sin (opposite over hypotenuse), and fCos is the cos (adjacent over hypotenuse).

So, set transform to identity. That just means like 1,1,1. Multiply a vector by identity and you get the vector, I think.

vBoneOrigin is a vector:

X: cos (bodysize + 0.5*leg length),

Y: 0.5,

Z: sin (bodysize + 0.5*leg length)

then set the transform to this vector. So, this is to find where the joint is, between the body, and the thigh. Same starting height for all legs, but X and Z plane goes around in circle.

// thigh

vToBone = (vBoneOrigin – vRoot).normalize()

Normalizing makes a unit vector (changes length to 1). So Bone Origin minus Root, normalized, means a unit vector in the direction of root, from the new thigh joint.

vAxis = vToBone.cross(vUp)

where vUp is a unit vector on the y axis.

transform.setRotation(btQuaternion(vAxis, M_PI_2));

Ok dunno. So… cross product is like a normal vector, perpendicular to both vToBone, and vUp, using a right hand rule. Both are unit vectors. So it’s like a weird sideways vector. Ok but that is called vAxis. AH. ok, so the axis is the freaking axis to rotate around. Duh. And it rotates on that axis, by PI/2. By 90 degrees.

The quaternion is just the mathematical system rotating it by 90 degrees. It must have rotated the transform to point downwards.

m_bodies[1 + 2 * i] = localCreateRigidBody(btScalar(1.), offset * transform, m_shapes[1 + 2 * i]);

Ok so that makes the thigh leg bits I guess.

// shin

transform.setIdentity();

transform.setOrigin(btVector3(btScalar(fCos * (fBodySize + fLegLength)), btScalar(fHeight – 0.5 * fForeLegLength), btScalar(fSin * (fBodySize + fLegLength))));

m_bodies[2 + 2 * i] = localCreateRigidBody(btScalar(1.), offset * transform, m_shapes[2 + 2 * i]);

So transform is moved to

X: cos (body + leg)

Y: height – 0.5*foreleg,

Z: sin (body + leg),

Ok so we already rotated 90 degrees, and now we have to move the transform to the axis for the foreleg. So the Y axis is the same for all of them, and then when you have cos and sin, that makes a circle around the y axis, at the (body+leg) distance. That might just make sense.

// Setup some damping on the m_bodies

setDamping, setDeactivationTime, setSleepingThresholds must be so the robot chills for a bit and acts a bit more earth-like.

Ok and finally

// Setup the constraints

Iterates through NUM_LEGS, same angle iteration.

//Hip joints first. (i.e. Between Body and Thigh)

localA.setIdentity();localB.setIdentity();

localA.getBasis().setEulerZYX(0, -fAngle, 0);

localA.setOrigin(btVector3(btScalar(fCos * fBodySize), btScalar(0.), btScalar(fSin * fBodySize)));

localB = m_bodies[1 + 2 * i]->getWorldTransform().inverse() * m_bodies[0]->getWorldTransform() * localA;

hingeC = new btHingeConstraint(*m_bodies[0], *m_bodies[1 + 2 * i], localA, localB);

hingeC->setLimit(btScalar(-0.75 * M_PI_4), btScalar(M_PI_8));

m_joints[2 * i] = hingeC;

m_ownerWorld->addConstraint(m_joints[2 * i], true);

Ok so A is rotated around Y axis by the current angle, and A is moved to bodySize outwards.

OK so for B… the inverse of the leg’s ‘world transform’, times the body’s ‘world transform’, times A. The body is presumably like sitting up straight, and A rotates it about Y, and then the inverse of the leg’s transform makes it point back towards origin.

And then the hinge constraint is set up with body and thigh, A and B. Limits set to -.75*PI/4 to PI/8. Apparently -33.75 degrees to 22.5 degrees.

//knee joints

localA.setIdentity();localB.setIdentity();localC.setIdentity();

localA.getBasis().setEulerZYX(0, -fAngle, 0);

localA.setOrigin(btVector3(btScalar(fCos * (fBodySize + fLegLength)), btScalar(0.), btScalar(fSin * (fBodySize + fLegLength))));

localB = m_bodies[1 + 2 * i]->getWorldTransform().inverse() * m_bodies[0]->getWorldTransform() * localA;

localC = m_bodies[2 + 2 * i]->getWorldTransform().inverse() * m_bodies[0]->getWorldTransform() * localA;

hingeC = new btHingeConstraint(*m_bodies[1 + 2 * i], *m_bodies[2 + 2 * i], localB, localC);

hingeC->setLimit(btScalar(-M_PI_8), btScalar(0.2));

m_joints[1 + 2 * i] = hingeC;

m_ownerWorld->addConstraint(m_joints[1 + 2 * i], true);

And now, same story but between leg and foreleg, from -22.5 degrees to 11.4 degrees.

Anyway, I get the idea.

Understanding MotorDemo.cpp (Part 1)

Going through the Bullet3 physics engine example code for ‘MotorDemo’. It’s a hexapod looking robot flexing its joints. The folder is bullet3/examples/DynamicControlDemo/

https://github.com/bulletphysics/bullet3/tree/master/examples/DynamicControlDemo

OK so there’s the .h file containing

#ifndef MOTORDEMO_H #define MOTORDEMO_H class CommonExampleInterface* MotorControlCreateFunc(struct CommonExampleOptions& options); #endif

C++ declares the function in .h files so that when compiling, all the .cpp files know about each other, without necessarily caring about the implementation details.

So this function is called ‘MotorControlCreateFunc’, which returns a pointer to a CommonExampleInterface, and takes a reference to CommonExampleOptions. Nice OOPy programming.

Let’s get into the CPP file.

Ok variables are:

time, cycle period, muscle strength, and an array of TestRig objects called rig

Constructor takes a pointer to a GUIHelperInterface. Destructor does no cleaning up.

They define an ‘initPhysics‘ and ‘exitPhysics‘. exitPhysics seems to have all the destructor code freeing up memory.

And three functions, spawnTestRig, setMotorTargets, and resetCamera.

define NUM_LEGS 6 define BODYPART_COUNT 2 * NUM_LEGS + 1 define JOINT_COUNT BODYPART_COUNT - 1

Looks like we can change some settings maybe. Will play with that later. So a leg had 2 body parts, plus there’s the central ‘body’ of the critter.

Now they define the TestRig class

btDynamicsWorld* m_ownerWorld;btCollisionShape* m_shapes[BODYPART_COUNT]; btRigidBody* m_bodies[BODYPART_COUNT]; btTypedConstraint* m_joints[JOINT_COUNT];

TestRig consists of a world, collision shapes, bodies and joints.

Let’s look at the rest of the code, because most of the code takes place in TestRig.

So as mentioned before, initPhysics and exitPhysics set up and shut down the physics engine gui.

So this code will be heavily copy-pasted. In this case, it sets up the 3d world, makes a ground, and spawns two test rigs. (Spider things), with a small ‘start offset’ vector from each other. They are pushed onto the rigs stack variable.

setMotorTargets takes a time as a variable. The comment is

// set per-frame sinusoidal position targets using angular motor (hacky?)

So it iterates over the number of rigs, and then twice per NUM_LEGS, sets up target hinge variables, for the animation.

Per hinge, it gets the current angle, sets the target percentage as time divided by 1000, mod the cycle period, divided by the cycle period. and then sets the target angle as 0.5 * (1 + sin (2*PI*target percentage)). Ok…

Target Limit Angle is the hinge’s lower limit, plus the ( target angle multiplied by the ( upper limit – lower limit)). Ok…

Angle Error is the target limit angle minus the current angle.

DesiredAngularVelocity is angle error / time, which is passed to each hinge’s enableAngularMotor function.

I’d be lying if I said I understood entirely. But this is pretty much the basis of the program.

void motorPreTickCallback(btDynamicsWorld* world, btScalar timeStep)

{

MotorDemo* motorDemo = (MotorDemo*)world->getWorldUserInfo();

motorDemo->setMotorTargets(timeStep);

}

It basically sets all the motor targets, based on the time that has passed, using some sort of sin-wave and the flexing-limits of the legs

Ok. More later.

The instructions in the wiki of pyBullet got me thinking, that I could use a proper IDE on the linux machine. I’m too old to learn emacs, and I’m not doing this in vi.

pyCharm is pretty good for Python.

But the Bullet Engine examples are all in C++, so I went with Eclipse CDT https://www.eclipse.org/cdt/ for a nicer experience.

So Eclipse needs Java. So I installed java with

sudo apt install openjdk-8-jdk

Unpacked gzip tar file and deleted it

tar xvf eclipse-cpp-2020-03-R-incubation-linux-gtk-x86_64.tar.gz

rm eclipse-cpp-2020-03-R-incubation-linux-gtk-x86_64.tar.gz

./opt/eclipse/eclipse

pyBullet

Let’s get back to the main thing we wanted to look at.

pip3 install pybullet –upgrade –user

cd /opt

git clone https://github.com/bulletphysics/bullet3.git

cd bullet3/

cd build3/

cmake -G”Eclipse CDT4 – Unix Makefiles” -DCMAKE_BUILD_TYPE=Release -DBUILD_BULLET2_DEMOS=ON -DBUILD_CPU_DEMOS=ON -DBUILD_BULLET3=ON -DBUILD_OPENGL3_DEMOS=ON -DBUILD_EXTRAS=ON -DBUILD_SHARED_LIBS=ON -DINSTALL_EXTRA_LIBS=ON -DUSE_DOUBLE_PRECISION=ON ..

make

make install

ldconfig

ok that installs it and builds the examples but seems to be for Eclipse CDT. Not sure I need a C++ IDE.

ok so i was supposed to do this for cmake:

./build_cmake_pybullet_double.sh

But anyway,

cd /examples/RobotSimulator

./App_RobotSimulator

COOL.

Ok lets run build_cmake_pybullet_double.sh

ok there we go

cd ./build_cmake/examples/ExampleBrowser

./App_ExampleBrowser

OK this is great.

copied from this dude https://medium.com/teamarimac/integrating-sonar-and-ir-sensor-plugin-to-robot-model-in-gazebo-with-ros-656fd9452607

what is ROS?

ThiruVenthanFollowDec 7, 2018 · 5 min read

Robotics operating system (ROS) is an open sourced robotic middle ware licensed under the open source, BSD license. ROS provides services like communication between progress, low level device control, hardware abstraction, package management and visualization tools for debugging. ROS based progress can be represented as graph where process happens in nodes and node communicate with others to execute the overall progress.

what is Gazebo?

Gazebo is a robotics simulator which allows to simulate and test our algorithm in indoor and outdoor environment. Some of the great features of Gazebo simulator are Advance 3D visualization , support to various physics engines (ODE, Bullet, Simbody, and DART) and the ability to simulate the sensor with noise etc., which ultimately results in a more realistic simulation results

Requirements

- Computer with Ubuntu 16.04.5 LTS

- ROS (Kinetic) installed and a basic understanding about ROS (tutorials)

- Gazebo_ros package installed.

- A catkin work space with robot URDF and world files. (a sample workspace : git clone https://thiruashok@bitbucket.org/thiruashok/rover_ws.git

Launching the Gazebo with the robot model

Go to the cloned directory and open the terminal (ctrl+alt+t) and run the following commands.

cd rover_ws

catkin_make

source devel/setup.bash

roslaunch rover_gazebo rover_world.world

You can see a robot in a simulation world, in gazebo as shown in figure below.

Open another terminal and run the following command to see the available topics.

rostopic list

You will get the following as the output.

/clock

/cmd_vel

/gazebo/link_states

/gazebo/model_states

/gazebo/parameter_descriptions

/gazebo/parameter_updates

/gazebo/set_link_state

/gazebo/set_model_state

/gazebo_gui/parameter_descriptions

/gazebo_gui/parameter_updates

/joint_states

/odom

/rosout

/rosout_agg

/tf

/tf_static

From the results you obtained you can observe that there are no any topic related to sensors, but cmd_vel topic is available, so we can navigate the robot by sending commands (given below) to this topic. As robot is now using differential drive mechanism, by changing the linear x and angular z values you can move the robot around.

rostopic pub /cmd_vel geometry_msgs/Twist "linear:

x: 1.0

y: 0.0

z: 0.0

angular:

x: 0.0

y: 0.0

z: 0.0"

Adding sonar and IR sensor models to the robot model

Open the rover.xarco file in the rover_ws/src/rover_description directory, using your favorite text editor. Add the following code above “ </robot>” tag.

<joint name="ir_front_joint" type="fixed">

<axis xyz="0 1 0" />

<origin rpy="0 0 0" xyz="0.5 0 0" />

<parent link="base_footprint"/>

<child link="base_ir_front"/>

</joint><link name="base_ir_front">

<collision>

<origin xyz="0 0 0" rpy="0 0 0"/>

<geometry>

<box size="0.01 0.01 0.01"/>

</geometry>

</collision> <visual>

<origin xyz="0 0 0" rpy="0 0 0"/>

<geometry>

<box size="0.01 0.01 0.01"/>

</geometry>

</visual> <inertial>

<mass value="1e-5" />

<origin xyz="0 0 0" rpy="0 0 0"/>

<inertia ixx="1e-6" ixy="0" ixz="0" iyy="1e-6" iyz="0" izz="1e-6" />

</inertial>

</link><joint name="sonar_front_joint" type="fixed">

<axis xyz="0 1 0" />

<origin rpy="0 0 0" xyz="0.5 0 0.25" />

<parent link="base_footprint"/>

<child link="base_sonar_front"/>

</joint><link name="base_sonar_front">

<collision>

<origin xyz="0 0 0" rpy="0 0 0"/>

<geometry>

<box size="0.01 0.01 0.01"/>

</geometry>

</collision> <visual>

<origin xyz="0 0 0" rpy="0 0 0"/>

<geometry>

<box size="0.01 0.01 0.01"/>

</geometry>

</visual> <inertial>

<mass value="1e-5" />

<origin xyz="0 0 0" rpy="0 0 0"/>

<inertia ixx="1e-6" ixy="0" ixz="0" iyy="1e-6" iyz="0" izz="1e-6" />

</inertial>

</link>

The above lines of code integrate senor models (a simple square) to the robot model. For some basic understanding of URDF file of a robot refer this. Now launch the world again by the following code.

roslaunch rover_gazebo rover_world.world

By changing the origin rpy and xyz values within the joint tag the sensor position can be changed.

<origin rpy=”0 0 0" xyz=”0.5 0 0.25" />

You can notice that the sensor model is now visible on top of the robot model.

Adding the sensor plugin for Sonar and IR

Gazebo_ros_range plugin can be used to model both the sonar and the IR sensor. This plugin publish messages according to sensor_msgs/Range Message format so that integration to ros can be easily done. To add the plugin to the open the rover_ws/src/rover_description/urdf/rover.gazebo file in your favorite text editor and add the following lines above “ </robot>” tag.

<gazebo reference="base_ir_front">

<sensor type="ray" name="TeraRanger">

<pose>0 0 0 0 0 0</pose>

<visualize>true</visualize>

<update_rate>50</update_rate>

<ray>

<scan>

<horizontal>

<samples>10</samples>

<resolution>1</resolution>

<min_angle>-0.14835</min_angle>

<max_angle>0.14835</max_angle>

</horizontal>

<vertical>

<samples>10</samples>

<resolution>1</resolution>

<min_angle>-0.14835</min_angle>

<max_angle>0.14835</max_angle>

</vertical>

</scan>

<range>

<min>0.01</min>

<max>2</max>

<resolution>0.02</resolution>

</range>

</ray>

<plugin filename="libgazebo_ros_range.so" name="gazebo_ros_range">

<gaussianNoise>0.005</gaussianNoise>

<alwaysOn>true</alwaysOn>

<updateRate>50</updateRate>

<topicName>sensor/ir_front</topicName>

<frameName>base_ir_front</frameName>

<radiation>INFRARED</radiation>

<fov>0.2967</fov>

</plugin>

</sensor>

</gazebo>

The avove one is for the IR, you can simply copy paste and this again and set the gazebo reference to base_sonar_front and change the topicName and frameName to appropriate one. Now run launch the gazebo.

roslaunch rover_gazebo rover_world.world

Sonar and IR senor rays can be seen in the simulation world. To see the sensor reading superscribe to the appropriate topic. see the commands bellow

rostopic list

Now the output will be like this:

/clock

/cmd_vel

/gazebo/link_states

/gazebo/model_states

/gazebo/parameter_descriptions

/gazebo/parameter_updates

/gazebo/set_link_state

/gazebo/set_model_state

/gazebo_gui/parameter_descriptions

/gazebo_gui/parameter_updates

/joint_states

/odom

/rosout

/rosout_agg

/sensor/ir_front

/sensor/sonar_front

/tf

/tf_static

You could notice that sonar and IR sensor are publishing to the new topics namely, /senor/ir_front and /sensor/sonar_front.

rostopic echo /sensor/ir_front

Type the above code in a the terminal to observe the out put from the IR sensor

header:

seq: 1

stamp:

secs: 1888

nsecs: 840000000

frame_id: "base_ir_front"

radiation_type: 1

field_of_view: 0.296700000763

min_range: 0.00999999977648

max_range: 2.0

range: 0.0671204701066

---

header:

seq: 2

stamp:

secs: 1888

nsecs: 860000000

frame_id: "base_ir_front"

radiation_type: 1

field_of_view: 0.296700000763

min_range: 0.00999999977648

max_range: 2.0

range: 0.0722889602184

---

header:

seq: 3

stamp:

secs: 1888

nsecs: 880000000

frame_id: "base_ir_front"

radiation_type: 1

field_of_view: 0.296700000763

min_range: 0.00999999977648

max_range: 2.0

range: 0.0635933056474

Like this, all the sensor (Lydar , camera, IMU) can be integrated to the robot model. This help a lot in validating the algorithm and finding the optimal sensor position without building the actual hardware fully.Arimac

The Official Blog of Arimac

54

Thanks to Ampatishan Sivalingam.

As an interlude from checking out Blender/phobos, I saw that the big free robot technology effort of the 2010s was probably ROS, the robot operating system, and as it has been around for a long time, it has had its own established ways, in the pre-phobos days. Before these kids could just click their doodads and evolve silicon lifeforms when they felt like it.

No, phobos documentation is actually just a bit terse, so let’s watch some Gazebo videos. This dude just jumps in, expecting you to have things set up. https://stackoverflow.com/questions/41234957/catkin-command-not-found but this guy and me “Probably forgot to set up the environment after installing ROS.

So yeah, what the hell is catkin? So, it’s like how ruby on rails wants you to ask it to make boilerplate for you. So we had to run:

0 mkdir chicken_project

1 cd chicken_project/

2 ls (nothing here, boss)

3 mkdir src

4 cd src

5 catkin_init_workspace

6 cd ..

7 catkin_make

8 source /opt/ros/chicken_project/devel/setup.bash

So, starting 53 seconds in:

Ok so his time guesstimate is a shitload of time. We’re going to go for the shoot first approach. Ok he copy-pastes some code, and apparently you have to copy it from the youtube video. No thanks.

So this was his directory structure, anyway.

cd src

catkin_create_pkg my_simulations

cd my_simulations/

mkdir launch

cd launch/

touch my_world.launch

cd ..

mkdir world

cd world

touch empty_world.world

That is funny. Touch my world. But he’s leading us on a wild goose chase. We can’t copy paste from youtube, dumbass.

Robot Operating System installation http://wiki.ros.org/melodic/Installation/Ubuntu

The geometric model describes the geometric relationships that specify the spatial extent of a given component.

From this website: https:

Kinematics is the study of motion of bodies without regard to the forces that cause the motion. Dynamics on the other hand is the study of the motion of bodies due to applied forces (think F=ma). For example consider orbital mechanics: Kepler’s Laws are kinematic, in that they describe characteristics of a satellite’s orbit such as its eliptical shape without considering the forces that cause that motion, whereas Newton’s Law of Gravity is dynamic as it incorporates the force of gravity to describe why the orbit is eliptical.